CredentialStoreManager Credentials Storage

Hi All,

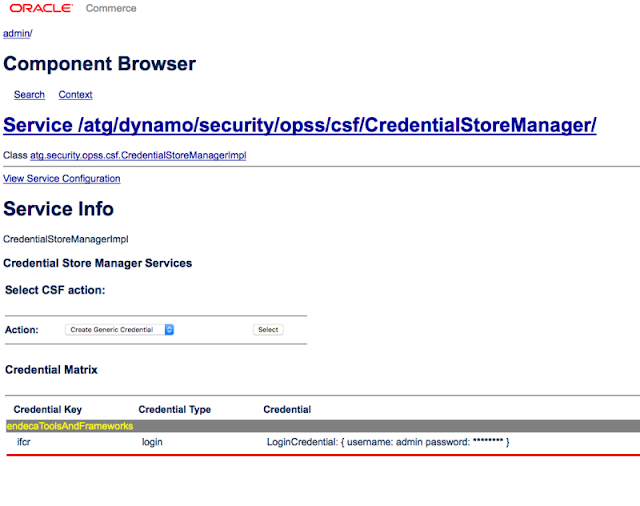

In this post, we are going to look at the component

/atg/dynamo/security/opss/csf/CredentialStoreManager

This class makes the calls that store and retrieve credentials from the credential store, using the map, key, and credential properties parameters. It initializes JPS, retrieves the credential store, and stores credentials in it. It is also used to delete credentials from the credential store.
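To make the map/key addressing concrete, here is a minimal in-memory sketch in Java. It is hypothetical: it is not the OPSS CSF API and not the CredentialStoreManager implementation, and the names `CredentialStoreSketch`, `store`, `retrieve`, and `delete` are made up for illustration. It only shows the idea that each credential lives under a map name plus a key, and that store, retrieve, and delete all use that same pair.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical in-memory model of a credential store: credentials are
// addressed by (map, key). This is NOT the OPSS CSF API.
public class CredentialStoreSketch {

    // A username/password pair; the password is kept as char[] rather than String.
    public static final class PasswordCredential {
        private final String name;
        private final char[] password;

        PasswordCredential(String name, char[] password) {
            this.name = name;
            this.password = password.clone();
        }

        public String getName() { return name; }

        // Returns a copy so callers cannot modify the stored password.
        public char[] getPassword() { return password.clone(); }
    }

    // map name -> (key -> credential)
    private final Map<String, Map<String, PasswordCredential>> maps = new HashMap<>();

    // Stores (or overwrites) the credential under map/key.
    public void store(String map, String key, String user, char[] password) {
        maps.computeIfAbsent(map, m -> new HashMap<>())
            .put(key, new PasswordCredential(user, password));
    }

    // Retrieves the credential stored under map/key, if any.
    public Optional<PasswordCredential> retrieve(String map, String key) {
        Map<String, PasswordCredential> entries = maps.get(map);
        return entries == null ? Optional.empty() : Optional.ofNullable(entries.get(key));
    }

    // Deletes the credential under map/key; returns true if one was removed.
    public boolean delete(String map, String key) {
        Map<String, PasswordCredential> entries = maps.get(map);
        if (entries == null || entries.remove(key) == null) {
            return false;
        }
        if (entries.isEmpty()) {
            maps.remove(map); // drop maps that no longer hold any keys
        }
        return true;
    }

    public static void main(String[] args) {
        CredentialStoreSketch store = new CredentialStoreSketch();
        // Made-up map/key names standing in for the Workbench credential.
        store.store("example.map", "example.key", "admin", "secret".toCharArray());
        System.out.println(store.retrieve("example.map", "example.key")
            .map(PasswordCredential::getName).orElse("missing")); // prints "admin"
        store.delete("example.map", "example.key");
        System.out.println(store.retrieve("example.map", "example.key").isPresent()); // prints "false"
    }
}
```

The nested map keeps lookups by map and key independent, which mirrors why the component takes both a map and a key parameter rather than a single identifier.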

Starting with ATG 11.2, when indexing is triggered from the Dyn/Admin, it is mandatory to store the Workbench password in the credential store and authenticate it before indexing can proceed.

To configure the credential, you can refer to my previous post here.

If you look at the component above, you will see that a JPSConfigurationLocation property is defined on it. It holds the path to the jps-config.xml file. If you want to point to the same file that was created in the early stages of application creation, update the property with that path. If you are not pointing to it, create the credentials again, give them the same Workbench values, and save them. Once created, they are stored at the following location: {atg.dynamo.home}/security/jps-config.xml. From then on, the credentials are read from this location unless you delete them.
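The default location described above is simply {atg.dynamo.home} followed by security/jps-config.xml. The minimal Java sketch below builds that path and reports whether the file exists, so you know what value to give JPSConfigurationLocation. It is a sketch under assumptions: the class name `JpsConfigLocator` is hypothetical, and reading the Dynamo home from an `atg.dynamo.home` system property assumes your JVM is started with that property set. It is not part of the CredentialStoreManager component.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: resolves <atg.dynamo.home>/security/jps-config.xml,
// the default location described in the post.
public class JpsConfigLocator {

    // Builds the expected jps-config.xml path from a Dynamo home directory.
    static Path jpsConfigPath(String dynamoHome) {
        return Paths.get(dynamoHome, "security", "jps-config.xml");
    }

    public static void main(String[] args) {
        // Assumption: the Dynamo home is passed as the atg.dynamo.home system property.
        String home = System.getProperty("atg.dynamo.home");
        if (home == null) {
            System.err.println("atg.dynamo.home is not set");
            return;
        }
        Path config = jpsConfigPath(home);
        if (Files.isRegularFile(config)) {
            System.out.println("Point JPSConfigurationLocation at: " + config);
        } else {
            System.out.println("No jps-config.xml found at " + config
                + "; create the credentials first");
        }
    }
}
```

For example, running `java -Datg.dynamo.home=C:\ATG\ATG11.3\home JpsConfigLocator` on Windows would resolve to `C:\ATG\ATG11.3\home\security\jps-config.xml`.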

In environments where we do not install ATG and deploy only the big EAR, the credentials also need to be created, as a one-time step. Make sure the same location is referenced every time the EAR is changed. In my case, the file is read from the following location: C:\ATG\ATG11.3\home\security\jps-config.xml
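When swapping EARs, one way to catch a drifted path is to compare the JPSConfigurationLocation value the new EAR uses with the location you expect. Here is a hypothetical Java sketch (`JpsLocationCheck` and `sameLocation` are made-up names, not ATG APIs). It converts both paths to absolute form and normalizes them before comparing, so `..` segments or relative forms do not cause false mismatches; relative paths are resolved against the current working directory.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical deployment check: confirms that the JPSConfigurationLocation
// value used by a new EAR points at the same jps-config.xml as before.
public class JpsLocationCheck {

    // Makes both paths absolute and removes "." / ".." segments before comparing.
    // Whether the comparison ignores case depends on the file system.
    static boolean sameLocation(String configured, String expected) {
        Path a = Paths.get(configured).toAbsolutePath().normalize();
        Path b = Paths.get(expected).toAbsolutePath().normalize();
        return a.equals(b);
    }

    public static void main(String[] args) {
        if (args.length != 2) {
            System.err.println("usage: java JpsLocationCheck <configured> <expected>");
            return;
        }
        System.out.println(sameLocation(args[0], args[1])
            ? "OK: same jps-config.xml location"
            : "WARNING: JPSConfigurationLocation points elsewhere");
    }
}
```

Running a check like this as part of each EAR deployment is a cheap way to notice that a rebuilt EAR would read credentials from a different, possibly empty, store.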

If you face any errors while creating the credential store, you can refer to my previous posts.